ASTC Compression Accleration Using CUDA

Overview

ASTC is a fixed-rate, lossy texture compression system that is designed to offer an unusual degree of flexibility and to support a very wide range of use cases, while providing better image quality than most formats in common use today.

However, ASTC need to search a lot of candidate block modes to find the best format. It takes a lot of time to compress the texture in practice. Since the texture block compression is not relevant to each other at all, we can compress the texture parallelly with GPU.

In our project, we compress the texture with Cuda. The compression algorithm is based on the arm astc-enc implementation. It’s a CPU-based compression program. We port arm-astc to GPU and make full use of the cuda to acclerate the texture compression.

A naive implementation of GPU ASTC compression is compressing the ASTC texture block per thread, that is, task parallel compression. Since the block compression task is heavy and uses many registers, the number of active warps is low, which causes low occupancy. To make full use of the GPU, we use data parallel to compress the ASTC block per CUDA block. It splits the “for loop” task into each thread and shares the data between lanes by warp shuffle as possible as we can.

The astc-enc implementation has a large number of intermediate buffers during candidate block mode searching, which has little performance impact on CPU-based implementations, but has a significant impact on GPU-based implementations. We have optimized this algorithm by in-place update, which removes the intermediate buffer.

CPU Based Implementation

Bounded Integer Sequence Encoding

ASTC uses BISE to encode color end points and color weights. We introduce BISE first since it is the basis of the ASTC compression algorithm.

Both the weight data and the endpoint color data are variable width, and are specified using a sequence of integer values. The range of each value in a sequence (e.g. a color weight) is constrained.

Since it is often the case that the most efficient range for these values is not a power of two, each value sequence is encoded using a technique known as “integer sequence encoding”. This allows efficient, hardware-friendly packing and unpacking of values with non-power-of-two ranges.

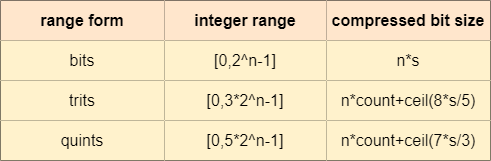

In a sequence, each value has an identical range. The range is specified in one of the following forms:

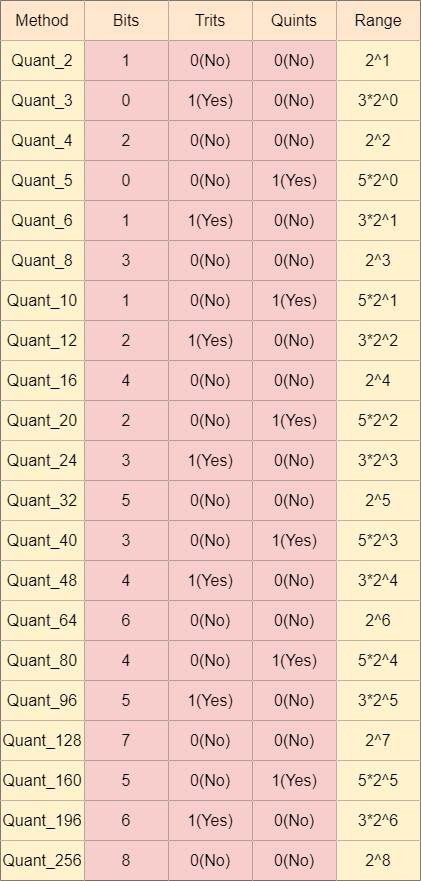

There are 21 quant methods in ASTC, including 6 quints quant forms, 7 trits quant forms and 8 bits quant forms.

For example, assume we have 3 integer values: 30, 50 and 70. The minimum range of quant formats among these values is Quant_80. The binary formats of these values are: 001 1110(30), 011 0010(50) and 100 0110(70). The total bits size before quantization is 21 = 7 * 3.

The binary format of value 80 is 101 0000. We split it into two parts, the higher parts 101 and lower parts 0000. The possible values of the higher parts are from 000 to 101, whose total number is 5, so Quant 80 is a quints form.

The higher parts of 30, 50 and 70 are [001, 011, 100]. In the Quant 80 method, the total possible number is 5, so the number of possible combinations of [001, 011, 100] is 5x5x5 = 125. We can precompute these possible values into a table and use the 7 bit value to index the table. The result is a 2 bit saving for these integers.

Higher parts [001, 011, 100] equal to [1,3,4]. Using index [1][3][4] to search the below quints table, we get the compressed value:11, which is 1011 in binary format. The compressed result is [000 1011](higher parts),[1110],[0010],[0110](lower parts) with size 19.

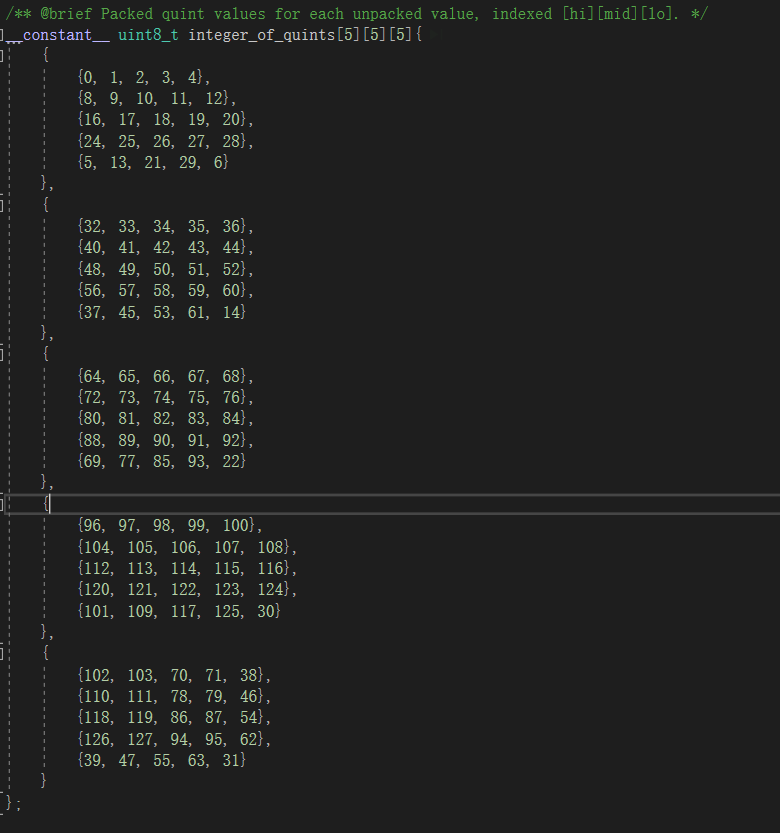

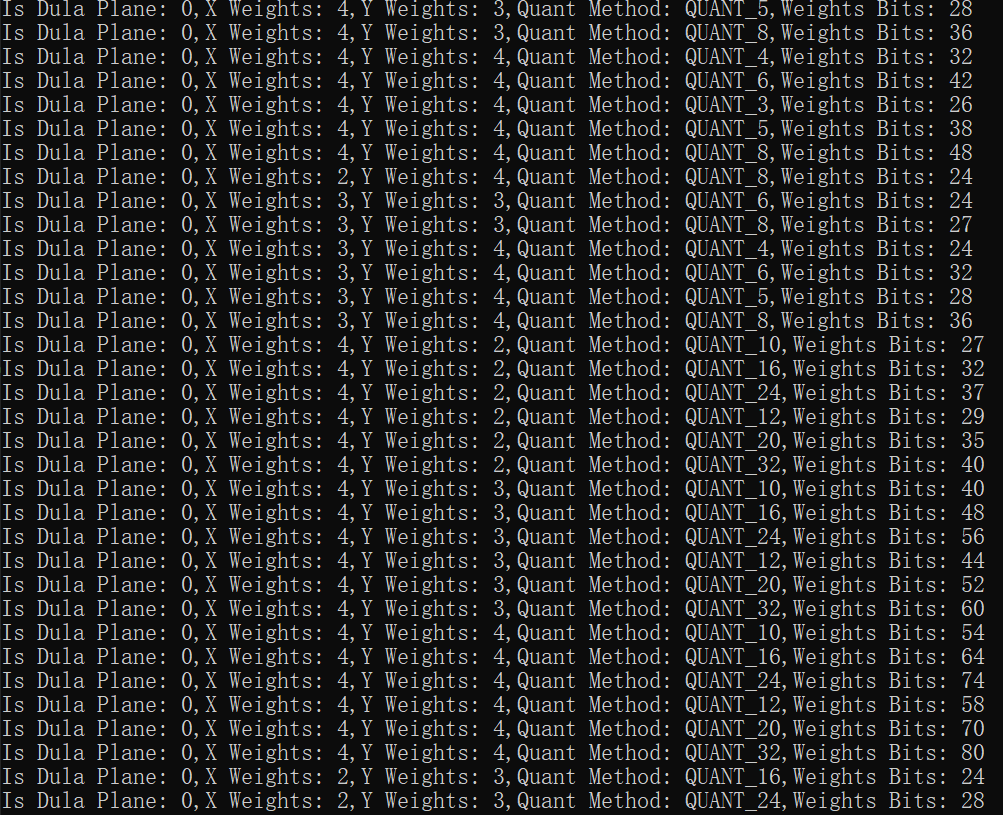

Generate Block Mode

ASTC uses 10 bits to store block modes, which means it has 2^11(2048) kind of possible choices. Given an ASTC compression format, some block modes may be invalid. For example, ASTC 4x4 compression format will never use a block mode with 6x6 texel weights. So we search for valid block modes and store the results in a global block mode table. In order to reduce the computation cost of block mode search, arm-astc reordered the block modes so that the better block mode has a higher priority.

Search block mode from 000000000(0) to 1111111111(2048).

1 | |

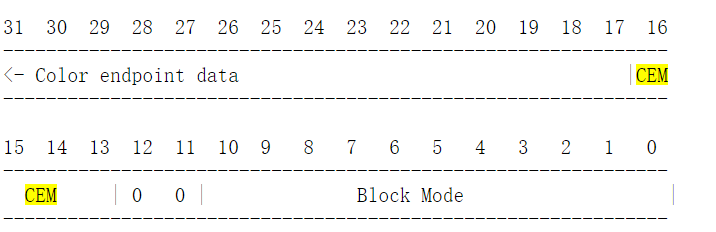

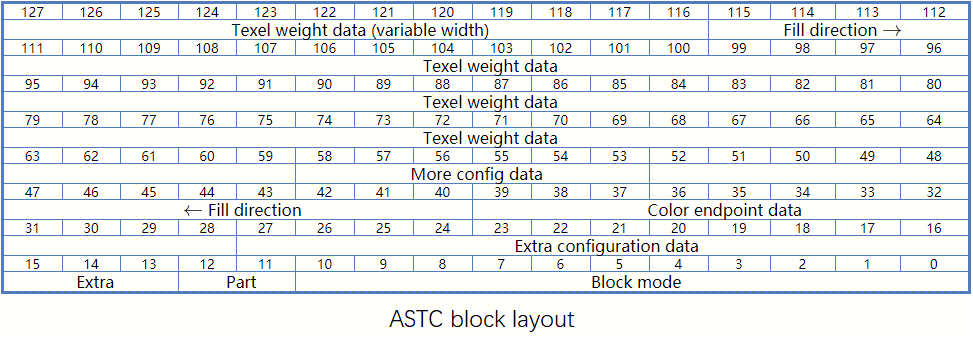

The Block Mode field specifies the width, height and depth of the grid of weights, what range of values they use, and whether dual weight planes are present.

For 2D blocks, the Block Mode field is laid out as follows:

The D bit is set to indicate dual-plane mode.

The A/B bits indicate the block weight size.

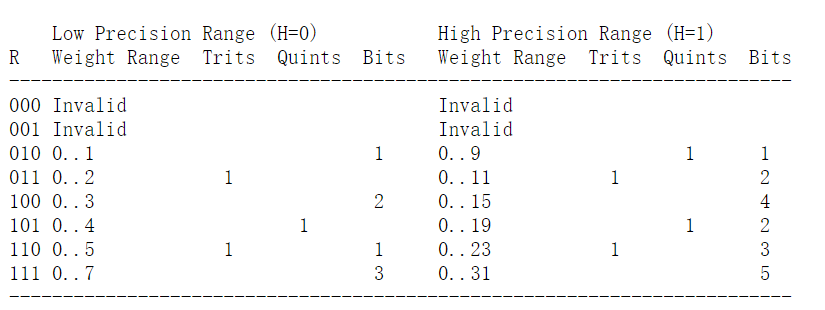

The weight ranges are encoded using a 3 bit value R(R0,R1 and R2), which is interpreted together with a precision bit H, as follows:

Decode the R,H,D, weight sizes x and y from the bits.

1 | |

Skip the block mode if the qunat weight size is larger than the compression block size.

1 | |

valid block mode:

Compute Ideal Color And Weights

Color Encoding

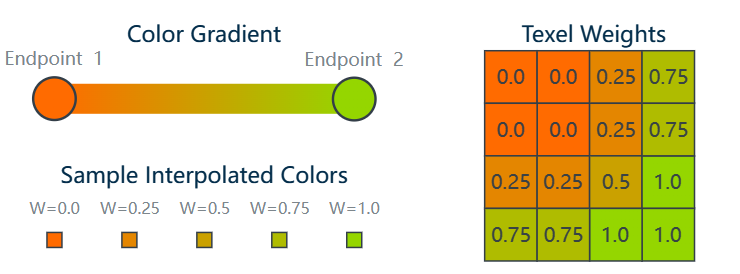

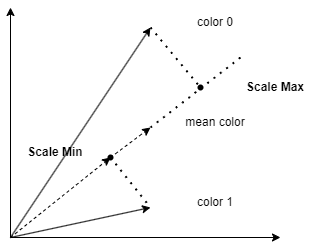

Each compressed block stores the end-point colors for a gradient, and an interpolation weight for each texel which defines the texel’s location along that gradient. During decompression the color value for each texel is generated by interpolating between the two end-point colors, based on the per-texel weight.

We sum up the relative colors in the positive R, G, and B directions and calculate the sum of direction lengths.

Endpoints Computation

Compute the mean color value and the main color direction first. There are many main direction calculation method. We use max accumulation pixel direction as the main direction, which is the same as the arm-astc implementation. We sum up the relative colors in the positive R, G, and B directions and calculate the sum of direction lengths.

1 | |

Use the maximum sum direction as the main direction

1 | |

Interpolation Weight Computation

Project the color into the main direction for each texel and find the minimum and maximum projected value by the way.

1 | |

Calculate the end points based on the min/max projected color.

1 | |

Normalize the weight range into 0 to 1:

1 | |

before color projection:

after color projection:

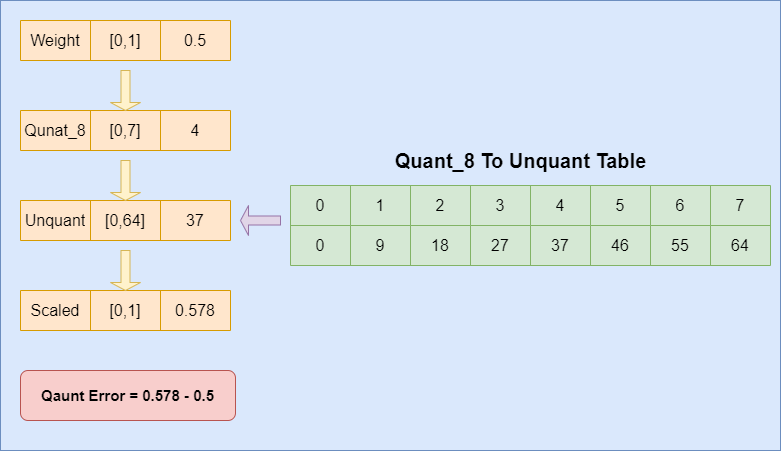

Compute Weight Quant Error

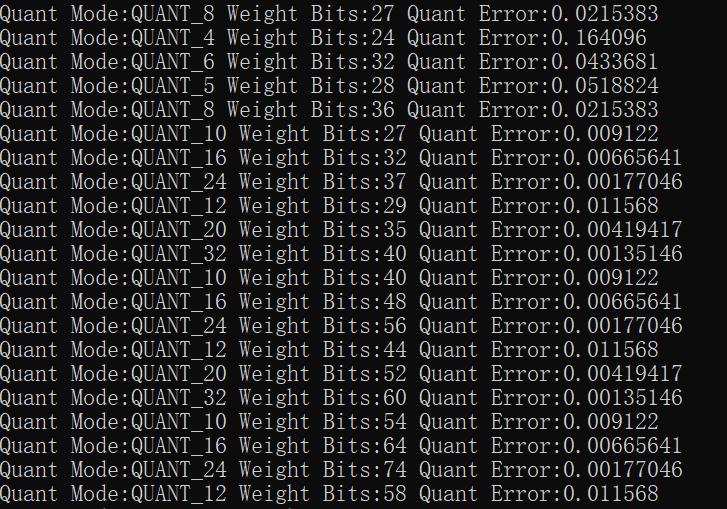

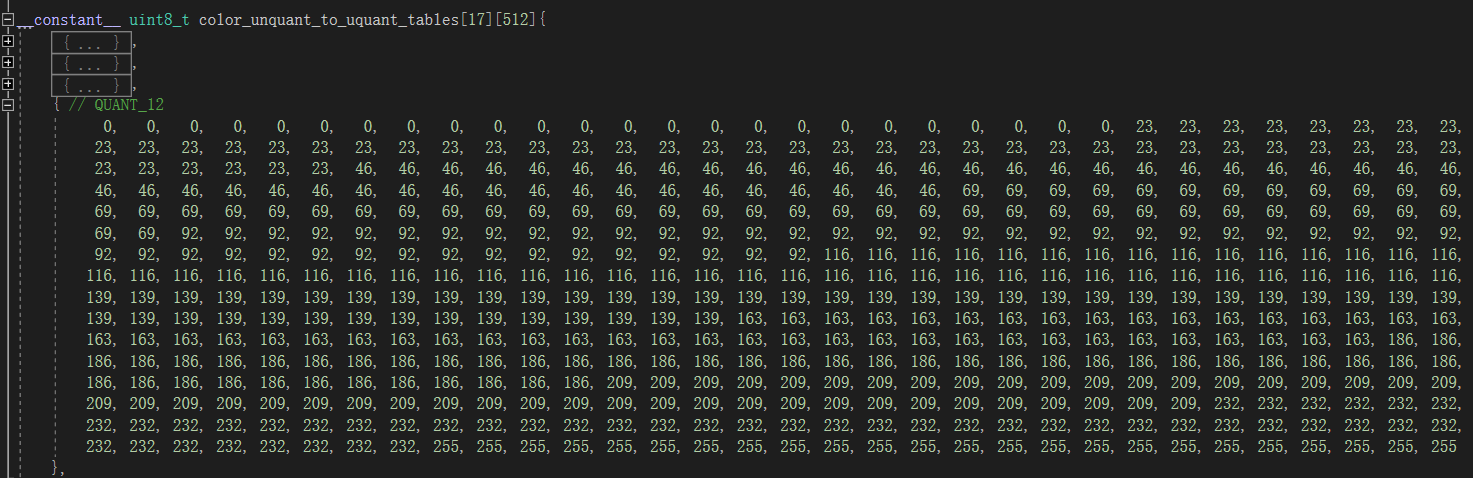

Compute the quant errors for each candidate block mode. Get the Quant method from the block mode and quantize the weights. After that, unquant the result by look up the precomputed quant map table. It should be noticed that the maximum color weight Quant method is Quant 32 and the maximum color end points Quant method is Quant 256.

Accumulate the weight quantization error for the texel weights in the block.

1 | |

weights quant error result:

Compute Endpoint Quant Error

The next step is to search for the best K candidate end point format as we have the quant error of each block mode.

CEM

CEM is the color endpoint mode field, which determines how the Color Endpoint Data is encoded. Here is the CEM layout for single-partition block layout:

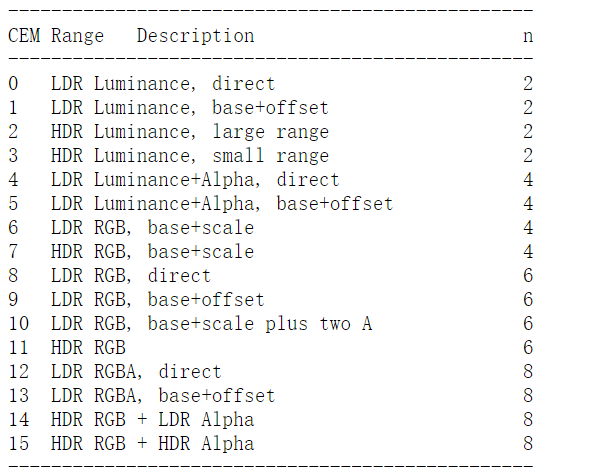

In single-partition mode, the Color Endpoint Mode (CEM) field stores one of 16 possible values. Each of these specifies how many raw data values are encoded, and how to convert these raw values into two RGBA color endpoints. They can be summarized as follows:

ASTC has 16 color end point modes. To store the end points, Modes 0 to 3 use two integers, Modes 4 to 7 use four integers, Modes 7 to 11 use six integers, and Modes 12 to 15 use eight integers. In our implementation, we only support six modes: mode 0, mode 4, mode 6, mode 8, mode 10 and mode 12.

Decode the different LDR endpoint modes as follows:

1.Mode 0 LDR Luminance, direct:

1 | |

2.Mode 4 LDR Luminance+Alpha,direct:

1 | |

3.Mode 6 LDR RGB, base+scale

1 | |

4.Mode 8 LDR RGB, Direct

1 | |

5.Mode 10 LDR RGB, base+scale plus two A

1 | |

6.Mode 12 LDR RGBA, direct

1 | |

Then, we estimate the error of each end point mode. Color end point modes can be classified into 3 types: luminance representation, scale representation and RGB representation. In astc-enc, the error estimation of luminance representation is the sum of the distances to vector normalize(lumi,lumin,lumin) = float3(0.57,0.57,0.57). Scale representation error estimation is the sum of the distance to vector normalize(EndPointA + EndPointB).

1 | |

Calculate and accumulate the scale and luminance error of each texel:

1 | |

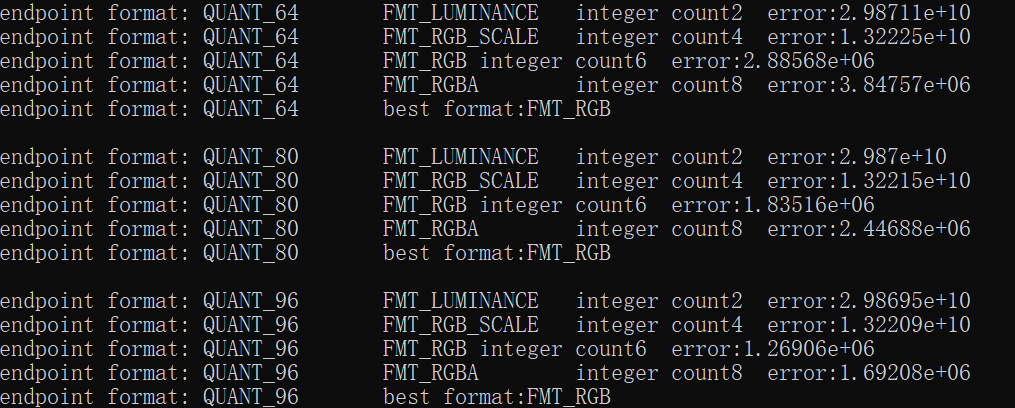

The endpoint encoding uses 21 quant levels and 4 kinds of integer numbers, resulting in a total candidate format count of 21 * 4. For each quant level, we choose the endpoint format for each kind of integer number.

The error estimation contains six parts: baseline quant error, base quant error RGB, base quant error RGBA, scale error, luminance error, drop alpha error.

1.Baseline quant error is precomputed in a look up table and indexed by quant level.

1 | |

2.In our implementation, the base quant error of each channel is the same. In astc-enc, the error of each channel can be adjusted by the user.

1 | |

3.Scale error, luminance error and drop alpha error are computed in the previous step.

4.The final error for each endpoint format is the combination of the above errors.

Take the example of computing the error for the endpoint format encoded by 4 integers using the quant method 7.

The error of mode <RGB base + scale> calculation formula is as follows:

rgbs_alpha_error = base quant error rgba * baseline quant error of quant method 7 + rgb scale error

The error of mode

full_ldr_rgb_error = base quant error rgb * baseline quant error of quant method 7 + alpha drop error

Select the format with the minimum error:

1 | |

Find The Best Endpoint Quantization Format

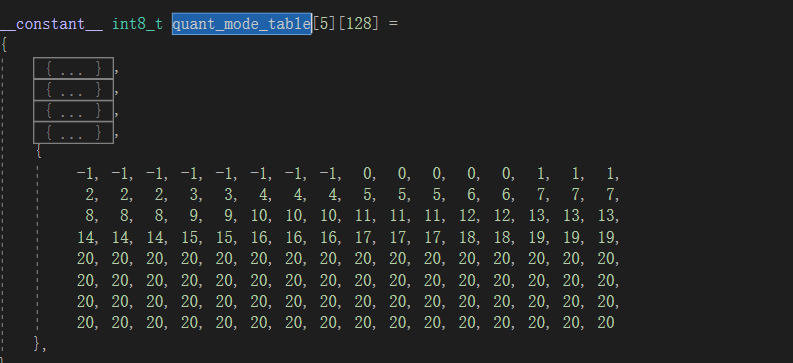

Search for all possible combinations of qunat level and color endpoint mode. We can obtain the available number of weight bits from the given block mode. Given the number of available bits and the number of integer, we want to choose the quant level as high as possible to minimize the quantization error. It can be precomputed in a lookup table offline:

The best quant level can be directly accessed from the lookup table at runtime:

1 | |

Store the best number of integers that has the minimum error given a block mode.

1 | |

best color endpoint quant format:

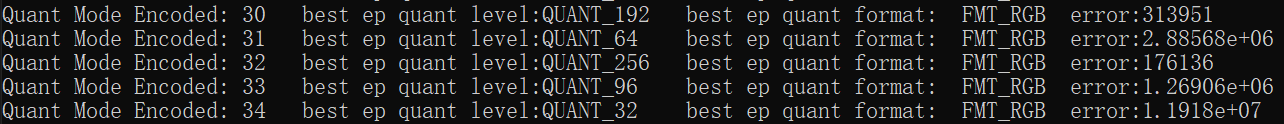

Find The Candidate Block Mode

The block mode total error is the sum of the errors that quantify the block texels weights and color endpoint.

1 | |

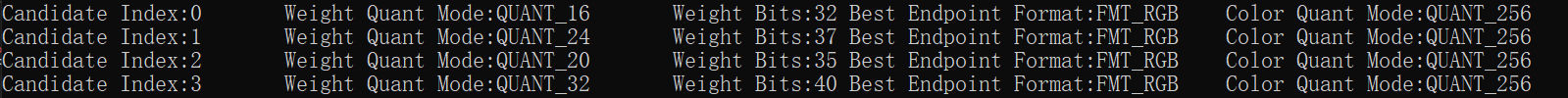

We only compute the rough estimation error up to the current step. The next step is to compute the exact error given a block mode. We need to compress and quantify the block texels for each block mode. Since it is an expensive process, we choose four candidate block modes based on the block mode estimation error.

For each candidate block mode search iteration, find the block mode with the minimum error combining weight quant error and endpoint quant error, record the candidate block mode index:

1 | |

Find The Actually Best Mode

Iterate over the 4 candidate block modes to find which one is actually best by quantifying the block and computing the error after unquantifying.

RGB Scale Format Quantification

As we quantize and decimate weights the optimal endpoint colors may change slightly, so we must recompute the ideal colors for a specific weight set.

RGB scale format contains two parts: the base endpoint and the scale factor.

1 | |

Compute the scale direction by normalizing the mean color and projecting the block texels to the scale direction. In addition, recording the min/max scale factor.

Compute the base color and scale factor based on the scale direction and scale maximum factor.

1 | |

Quantify Endpoints

Endpoint quantification is the same as interpolation weight quantification. There are only 17 possible quant levels and 255 possible values for each color channel. Therefore, the results can be stored in a precomputed table.

Error Metric

Given a candidate block mode, interpolate the texel color using quantized color endpoints and quantized weights. Compute the color difference and estimate the error using the squared error metric.

1 | |

Find the block mode with the minimum block error.

1 | |

Block Encode

Having found the best block mode with the minimum error, we can finally encode the block.

Below is a layout of the ASTC block. It contains the following parts in order: texel weight data, color endpoint data, color endpoint mode, extra data and block mode data.

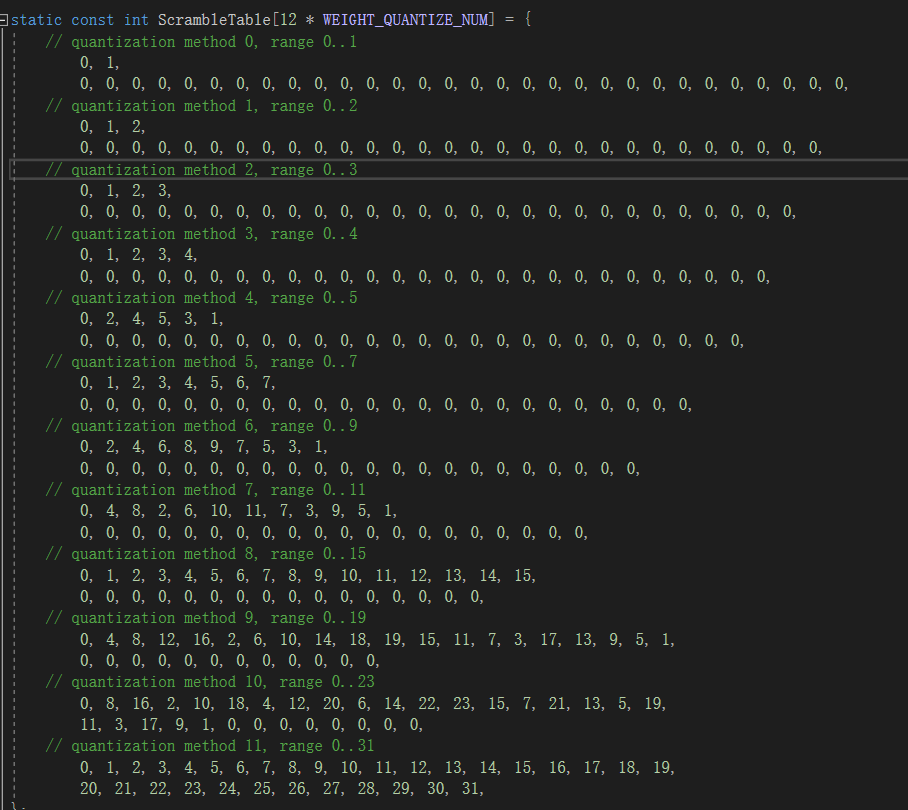

For texel weight, we scale the value based on the quant level of the best block mode. In order to improve the decoding efficiency, ASTC scrambles the order of the decoded values relative to the encoded values, which means that it must be compensated for in the encoder using a table.

1 | |

Once unpacked, the values must be unquantized from their storage range, returning them to a standard range of 0- 255.

For bit-only representations, this is simple bit replication from the most significant bit of the value.

For trit or quint-based representations, this involves a set of bit manipulations and adjustments to avoid the expense of full-width multipliers. This procedure ensures correct scaling, but scrambles the order of the decoded values relative to the encoded values. This must be compensated for using a table in the encoder.

The scramble map table is precomputed:

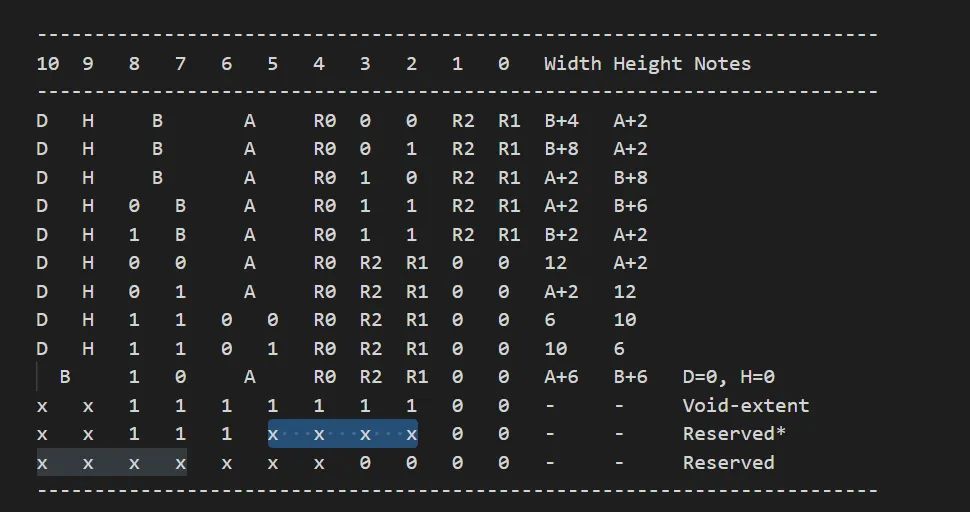

Next, encode the integer sequence based on the Quant method. Assume that we encode the integer sequence using the Quant_80 method. Quant_80 method quantifies the integer sequence in quints form. Since 5^3 is 125, it is possible to pack three quints into 7 bits (which has 128 possible values), so a quint can be encoded as 2.33 bits.

We split the integer into higher and lower parts and pack three integers’ higher parts into seven bits. The result is precomputed and stored in a lookup table.

1 | |

Then, pack the result with the lower part of the integer.

1 | |

The color endpoint packing is the same as the texel weight packing.

GPU Based Implementation

There are two ways to accelerate ASTC block compression: task parallel and data parallel. The task parallel is compressing the texture block per thread. The task parallel for ASTC block compression is heavy and uses many registers. This means that the number of active warps is low and we have low occupancy. Therefore, we can’t make full use of GPU for task parallel.

For data parallel, we compress the ASTC block per cuda block. The GPU-based implementation references the CPU implementation. It splits the “for loop” task into each thread and shares the data between lanes by warp shuffle as possible as we can. For those data that can’t be efficiently shared by warp shuffle, we use shared memory to exchange the data.

Compute Endpoints

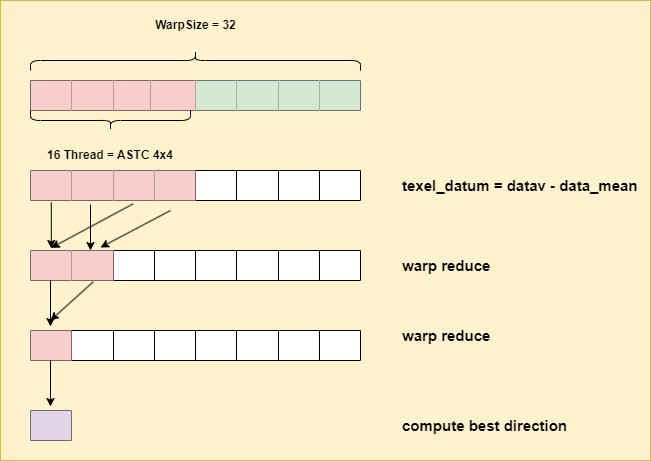

The first step is computing the best projection direction for the current block. Before this step, we load the image pixel data per thread and compute the mean pixel data by warp reduce sum operation. Then we broadcast the mean data to the whole warp.

Since the ASTC format 4x4 only has 16 texels to compress and the warp size on N-card is 32, we should mask the lanes used for block texels loading and sum operation.

1 | |

We use the max accumulation direction method to compute the best direction, which is the same as the CPU-based implementation. Each lane computes the offset direction relative to the mean block color. Then, we perform warp reduce to compute the sum of the offsets in the xyz direction.

If the lane ID is 0, compute and normalize the best direction based on the length of the sum of offsets in each direction.

1 | |

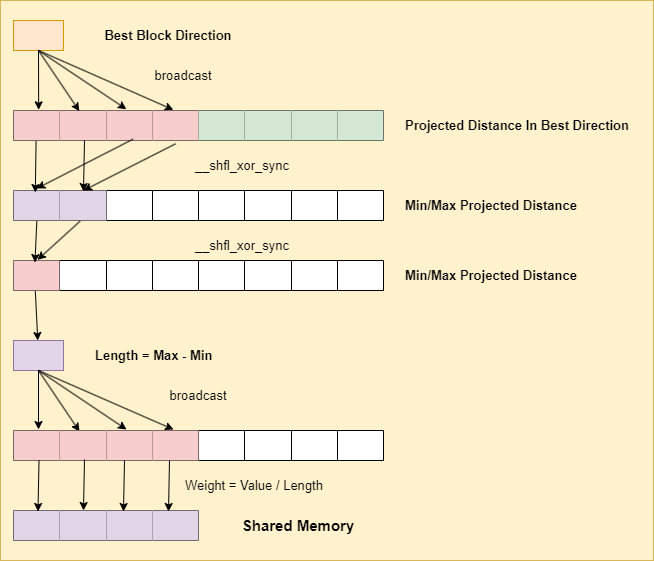

Compute the interpolation weight for each block texels. First, broadcast the best direction to the whole warp and project the texel data to the best direction. Then, use __shfl_xor_sync to compute the min/max value. With the min/max value, we can compute the scaled weight and store the result in shared memory.

Find The Candidate Block Mode

Our algorithm is based on the arm astc-enc implementation. However, we can’t port the astc-enc to Cuda directly. The astc-enc implementation has a large number of intermediate buffers during candidate block mode searching, which has little performance impact on CPU-based implementations, but has a significant impact on GPU-based implementations.

Here is a brief introduction to how astc-enc finds the candidate block mode:

1.Compute the quant error for each block mode and store the result and quant bits used in an intermediate buffer with the size of 2048 * (float + uint8)

2.For each block mode, compute the best combination with the candidate color quant format. This step has 3 intermediate buffers: best combination error buffer with the size of 2048xfloat, best color quant level buffer with the size of 2048xuint8, best endpoint format with the size of 2048xuint.

3.Choose 4 best candidate block mode and compute the more accurate error.

4.Compress the block using the best block mode

A lot of memory is wasted on the intermediate buffer. We have optimized this algorithm by in-place update, which removes the usage of the intermediate buffer:

1.Maintain a buffer recording the 4 candidate quant formats.

2.Iterate the block mode, compute the interpolation weight quant error, the best combination with the color quant format.

3.Compare with the candidate quant format stored in the step 1. Replace the candidate mode that has a larger error to current quant format.

4.Other steps are the same as the astc-enc implementation.

Prepare

Before iterating 2048 candidate block modes, we need to prepare some infomation used in block quant error computation.

The first one is the color endpoint format error for scale-based color endpoints or luminance-based color endpoints. We compute the errors for each block pixel and sum up the error by warp reduction. Then store the results in shared memory.

1 | |

Color endpoint has 21 quant methods and 4 kind of interger number to quant with total 21*4 possible combinations. We precomputed the result before block mode iteration. Each thread computes one error for one quant method and stores the result in shared memory.

1 | |

Block Mode Iteration

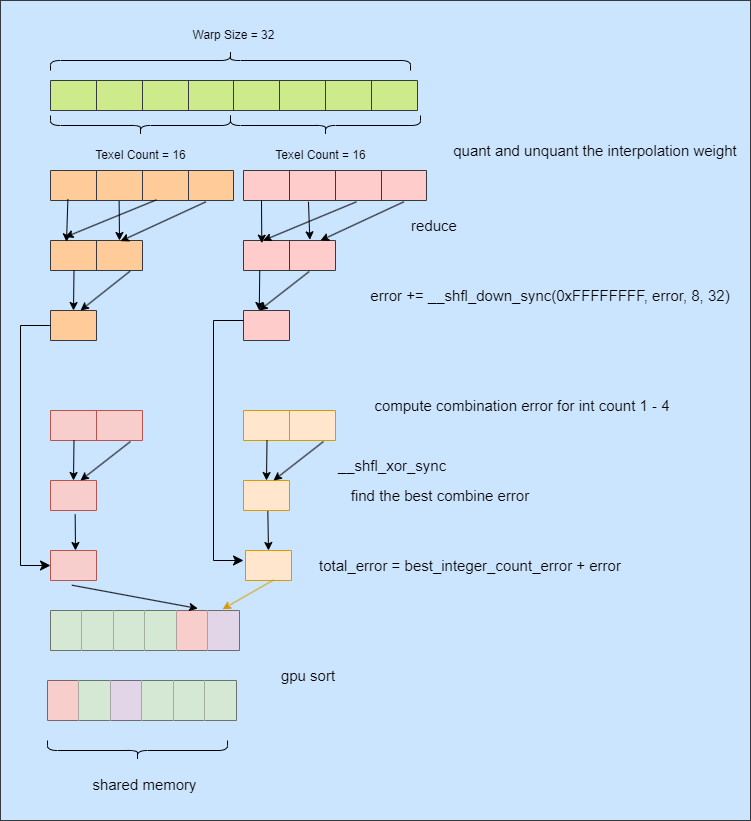

To make full use of the GPU, we process 2 block modes for each block mode iteration. Each processed block mode is handled by 16 threads, which is half the size of the warp.

1 | |

In the arm astc-enc implementation, weight quant errors are computed separately from color endpoint quant errors. The process generates a lot of intermediate buffers. To optimize intermediate buffer usage, we combine the separate passes together and update the total error in-place.

We compute the difference between quanted weights and unquantified weights per thread. The quant error of the block mode is computed by summing the squared texel error using warp reduction.

For each block mode, dispatch four threads to compute the combined error for four endpoint quant formats: integer number 1 to integer number 4. Then, use __shfl_xor_sync to find the best endpoint quant format with minimum error.

We maintain a shared candidate block mode buffer with 4 actual candidate block modes and 2 block modes updated during each iteration. The last two block modes (4 + 0 and 4 + 1) are used for final GPU sorting.

1 | |

When current block mode iterations have been completed, perform a GPU sorting. The first four candidate block modes are used in the next pass.

1 | |

Find The Best Block Mode

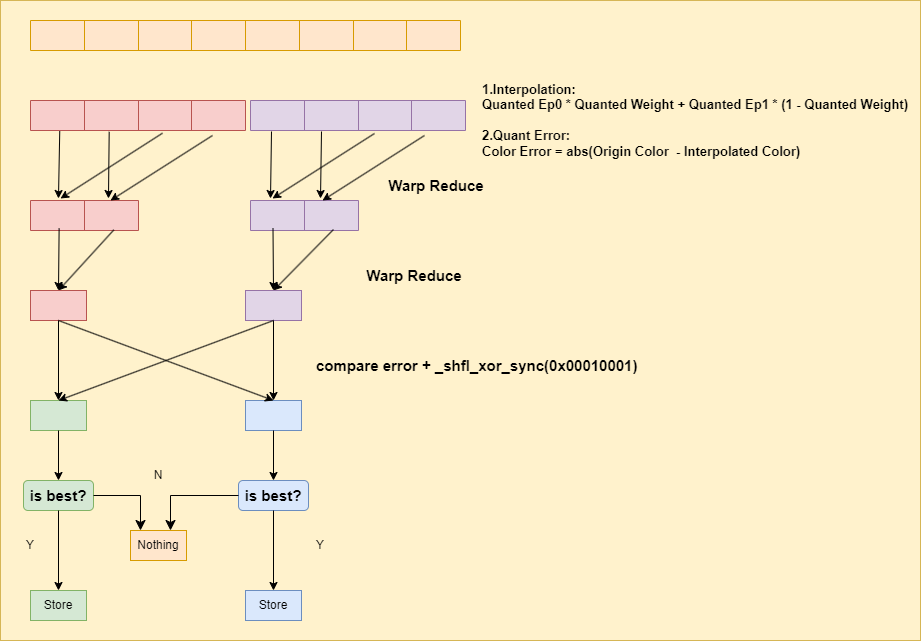

We get four block mode candidates after all block mode iterations are complete. However, to accelerate block mode seraching, the candidate block modes are selected by approximate error instead of the actual difference between the original color and the compressed color. So, in the current pass, we quantify the interpolation weights and color endpoints and compute the exact difference between compressed color and original color.

The exact best block mode is stored in shared memory that will be used in the final block mode compression.

Compress the block mode

The final block mode compression is the same as the CPU-based implementation.

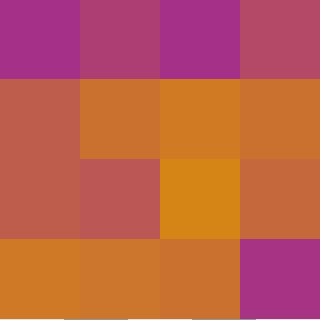

after astc compression:

before astc compression: